Introduction

A common myth believed by many inexperienced producers and engineers is that poorly recorded audio can be ‘fixed in the mix’. Those who have tried ‘mix-fixing’ will generally agree that there is no substitute for quality audio recorded at source. This means using the correct microphone for the job, meticulously adjusting and reviewing placement techniques to get the desired sound and, in many cases, use of sonically pleasing analogue equipment to add character to the sound before the signal is forwarded for recording.

Most producers these days use digital recording techniques, so, unless advanced mixdown effects are an integral element of the artistic piece, it generally makes sense to get as near to the final desired sound as possible before digitisation takes place. Many producers, however, are still unaware of the scientific impact of over-processing audio at the waveform level – knowledge of such consequences could persuade some to re-evaluate their methods.

This article describes some common equalisation (EQ) techniques which are regularly employed and discusses their impact on the overall reproduced sound. For example, simple EQ shaping can be used to effectively add ‘punch’ to a bass sound or add ‘air’ to a vocal track. However, if this type of EQ is overused or incorrectly applied, there can be a detrimental impact on the final sound quality.

Published recommendations from producers and industry experts are used to highlight ways to avoid the incorrect use and over-use of both analogue and digital equalisation. Experienced and successful producers are generally familiar with the positive and adverse sonic effects of EQ used in recording and mixdown. This article, however, goes further, giving scientific explanations of the effects that can be heard and seen at the waveform level when equalisation is incorrectly applied.

Everything needs a little EQ, doesn’t it?

To EQ or to not EQ?

Equalisation is used to enhance or reduce the effects of specific frequencies present in a section of audio. This is very useful for making sounds stand out from each other in a complete mix and for reducing the effect of unwanted noise or of resonant frequencies introduced by the recording environment. However, EQ and filtering, by their very nature, add noise, delay and phase distortion to a sound (Hood, 1999, p237; Mellor, 1995). Digital filtering also introduces quantisation and truncation noise owing to the heavy multiplicative processing involved (Miller, 2005).

Many producers would agree that avoiding the use of EQ by getting the recorded sound correct in the first place is a much better solution:

Equalisation should be employed only after all efforts have been made to obtain the best sound at source (White, 2003, p68).

Producer Jim Abbiss explains that unnecessary EQ can be avoided by using more than one microphone to record an audio source. The balance of the chosen microphones can be adjusted to achieve the desired sonic and spectral attributes at mixdown.

For guitars I generally start with an SM57 and a Royer 121 together, placed slightly off-centre. It’s the perfect combination: if I want to brighten the sound, I’ll turn up the 57, if I want warmth, I’ll turn up the Royer. This rather than EQ things. (Jim Abbiss quoted by Tingen, 2006)

Over-use of EQ

In many cases, over-use of EQ at mixdown is owing to time constraints and oversights at the recording stage. Engineers can sometimes be so focused on capturing the audio accurately and efficiently that they overlook the fact that the sound is shaped and defined far more during recording than it ever will be at mixdown.

The main contribution to the sonic makeup of any recorded sound is the environment in which the audio is recorded. The main components are:

– The quality of the sound projecting from the music source

– The quality and suitability of the microphone used for capturing the audio

– The location of the recording and the acoustic characteristics of the ambient environment

(Huber & Runstein, 2005, p451).

So, for example, if a talented singer is recorded with a suitable quality microphone in an acoustically sympathetic environment, there should be little or no need to use EQ in mixdown. Sometimes projects are limited by the available microphones and recording locations, in which case considerable use of EQ at mixdown could be the only solution. But the best engineers and producers will look to solve these problems practically, rather than relying on EQ to cover up any inadequacies. In many cases this might require trying many different microphones to find the most complementary result, or re-positioning within the room to avoid problem frequencies and to achieve the desired reverb characteristic.

Unfortunately, the availability of modern EQ systems and the apathy of some recording engineers lead to EQ being used as a solution, which can have adverse consequences. This point is argued persistently by producer Joe Boyd (2006, p204):

These days most engineers confronted with a displeasing sound reach for the knobs on the console and tweak the high, mid or low frequencies. When that process is inflicted on more and more tracks of a multi-channel recording the sound passes through dozens of transistors, resulting in a narrower, more confined sound. With the added limitations of digital sound, you end up with a bright and shiny, thin and two-dimensional recording. To my ears, anyway.

Over-use of digital EQ can have similar effects, with added delay and phase distortion. This ultimately leads to a less cohesive sound and the possibility of introducing comb filtering (discussed under ‘Delay and phase distortion’ below).

Spectral spacing of instruments by use of EQ

One of the many uses for analogue or digital EQ is to separate instruments within a mixed section of audio. For example, if there are two guitarists playing similar sections, then the guitars can be separated in the mix by adding a tonal boost to each instrument at different frequencies. This can help to balance the overall spectral content of the mix by ensuring that the two guitars are not occupying the same frequency bands. Gibson (2005, p. 196), however, states that this should be done very carefully with equalisation:

EQ will work, but it will very quickly make your sound unnatural. It is really easy to be seduced by the clarity you have achieved and forget to notice that your instrument now sounds like it is coming out of a megaphone.

It could be argued that an alternative method is to ensure that the two guitarists use different guitar setups to achieve the same spectral separation. This requires a little more analysis of the sound prior to recording, but the benefit can be well-balanced, quality audio which requires less processing at mixdown. The possibilities for tonal makeup with a guitar setup are endless, with multiple body, pickup and amp combinations. It is a wasted opportunity not to consider this approach and optimise the two instruments within the context of the song during recording.

Common EQ issues

Delay and phase distortion

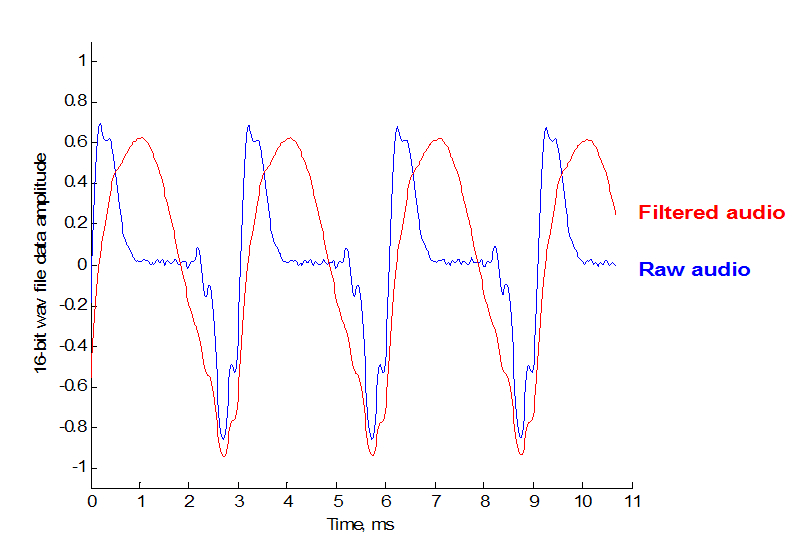

Standard analogue and digital filters and equalisation systems add a small time delay to the processed audio. This is because analogue filters rely on energy-storing (reactive) components and most digital EQ filters rely on recursive feedback, so the output of the filter does not respond immediately to its input. Figure 1 shows a raw and a filtered audio signal. It can be seen that the zero crossing points in the processed audio (at approximately 3, 6 and 9 ms) lag behind those of the raw data. A further issue is that the output of a filter is not usually delayed by a constant amount across the entire frequency spectrum, which “can lead to a loss of depth and clarity” (Walker, 2006).

This is one reason why certain filters and EQ systems sound subjectively better than others. The inaccuracies of some filters are harsher than those of others, and in some cases the inherent delay and phase distortion of a filter can even give a perceived improvement to the sound – an effect particularly associated with classic analogue filters.
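The frequency-dependent delay described above can be made concrete with a few lines of Python. The sketch below is illustrative only and is not taken from the cited sources: it builds a standard second-order low-pass biquad from the widely used RBJ ‘Audio EQ Cookbook’ formulas and estimates its group delay at several frequencies. The 48 kHz sample rate, 1 kHz cut-off and Q of 0.707 are arbitrary assumptions.

```python
import cmath
import math

FS = 48000.0  # assumed sample rate (Hz)

def lowpass(fc, q=0.7071, fs=FS):
    """RBJ cookbook second-order low-pass biquad, normalised so that a[0] == 1."""
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [(1 - cw) / 2, 1 - cw, (1 - cw) / 2]
    a = [1 + alpha, -2 * cw, 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response(b, a, f, fs=FS):
    """Complex frequency response of the biquad at f Hz (z^-1 evaluated on the unit circle)."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    return (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)

def group_delay_ms(b, a, f, df=1.0):
    """Group delay -dphi/domega, estimated with a central difference around f."""
    dphi = cmath.phase(response(b, a, f + df) / response(b, a, f - df))
    return -dphi / (2 * math.pi * 2 * df) * 1000.0

b, a = lowpass(1000.0)
delays = {f: group_delay_ms(b, a, f) for f in (100, 500, 1000, 5000)}
for f, d in delays.items():
    print(f"{f:>5} Hz: group delay {d:.3f} ms")
```

With these assumed settings the low and mid frequencies are delayed by roughly a quarter of a millisecond, while frequencies well above the cut-off are delayed far less: the delay is not constant across the spectrum.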

Advanced software filters have been developed which achieve linear phase filtering, delaying all frequencies by the same amount. As discussed by Walker (2006), transients and harmonics emerge from linear phase filters with “much greater transparency and detail”. Unfortunately there is a price to pay: linear phase EQ filters are prone to ringing at their own resonant frequency, and undesired pre-echo artefacts can be generated. These types of EQ filter also require detailed mathematical computation, which places an exceptionally heavy load on the computer processor and can also result in quantisation and truncation errors, as discussed by Miller (2005).

Figure 1
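A linear phase filter can be sketched in the same way. The example below is an illustration under assumed settings (a 101-tap Hann-windowed sinc low-pass at 5 kHz, 48 kHz sample rate). It shows the two properties discussed above: the symmetric impulse response delays every passband frequency by exactly (N - 1)/2 samples, and a substantial part of its energy lies before the main peak, which is the origin of pre-echo (pre-ringing).

```python
import cmath
import math

FS = 48000.0
N = 101        # assumed odd tap count, giving a delay of (N - 1) / 2 samples
FC = 5000.0    # assumed cut-off frequency (Hz)

def windowed_sinc_lowpass(n_taps, fc, fs=FS):
    """Symmetric (linear phase) FIR low-pass: ideal sinc response times a Hann window."""
    m = (n_taps - 1) / 2
    taps = []
    for n in range(n_taps):
        x = n - m
        ideal = 2 * fc / fs if x == 0 else math.sin(2 * math.pi * fc / fs * x) / (math.pi * x)
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * n / (n_taps - 1))
        taps.append(ideal * hann)
    return taps

def response(h, f, fs=FS):
    """Complex frequency response of the FIR filter h at f Hz."""
    w = 2 * math.pi * f / fs
    return sum(c * cmath.exp(-1j * w * n) for n, c in enumerate(h))

def group_delay_samples(h, f, df=1.0):
    """Group delay in samples, estimated with a central difference around f."""
    dphi = cmath.phase(response(h, f + df) / response(h, f - df))
    return -dphi / (2 * math.pi * 2 * df / FS)

h = windowed_sinc_lowpass(N, FC)
delays = [group_delay_samples(h, f) for f in (100.0, 1000.0, 3000.0)]
centre = (N - 1) // 2
pre_fraction = sum(c * c for c in h[:centre]) / sum(c * c for c in h)  # energy before the peak
print("group delay (samples):", [round(d, 6) for d in delays])
print(f"fraction of impulse energy arriving before the main peak: {pre_fraction:.2f}")
```

The fixed 50-sample delay (about 1 ms at 48 kHz) is also why linear phase processors introduce latency.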

A technique for reducing the phase distortion effects of conventional (non-linear-phase) EQ is ‘feathering’, as described by Owsinski (2000, p17), who gives the following example:

…instead of adding +3dB at 100Hz, you add +1.5dB at 100Hz and 0.5dB at 80Hz and 120 Hz. This lowers the phase shift brought about when using analogue equalisers and results in a smoother sound.
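Owsinski’s feathering example can be checked numerically. The sketch below cascades RBJ ‘Audio EQ Cookbook’ peaking filters for the single +3 dB boost and for the feathered version, then reports the gain at 100 Hz and the largest phase shift between 20 Hz and about 1 kHz. The quote does not give a bandwidth, so a Q of 1.4 (roughly one octave) is assumed for every band, along with a 48 kHz sample rate.

```python
import cmath
import math

FS = 48000.0
Q = 1.4  # assumed bandwidth; Owsinski's example does not specify one

def peaking_eq(f0, db, q=Q, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalised so that a[0] == 1."""
    big_a = 10 ** (db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cw, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cw, 1 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response(filters, f, fs=FS):
    """Complex response of a cascade of biquads at f Hz."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = 1 + 0j
    for b, a in filters:
        h *= (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return h

def gain_db(filters, f):
    return 20 * math.log10(abs(response(filters, f)))

freqs = [20 * 1.01 ** k for k in range(400)]  # log-spaced, 20 Hz to about 1 kHz

def max_phase_deg(filters):
    return max(abs(math.degrees(cmath.phase(response(filters, f)))) for f in freqs)

single = [peaking_eq(100, 3.0)]
feathered = [peaking_eq(100, 1.5), peaking_eq(80, 0.5), peaking_eq(120, 0.5)]
for name, chain in (("single", single), ("feathered", feathered)):
    print(f"{name:>9}: {gain_db(chain, 100):.2f} dB at 100 Hz, max phase {max_phase_deg(chain):.2f} deg")
```

With the assumed Q the feathered cascade peaks somewhat below 3 dB at 100 Hz, so part of the reduction in phase shift comes from the smaller total boost; the comparison is indicative rather than strictly like-for-like.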

A small amount of phase error or phase distortion is usually acceptable on an individual audio track. However, when many audio sources are equalised, processed – perhaps with many different audio effects – and then mixed together, there is the potential to cause comb filtering (Katz, 2002, p45). Comb filtering occurs when a signal is mixed with a delayed or phase-shifted version of itself: frequency components that arrive out of phase cancel, producing a series of regularly spaced notches in the spectrum. This can leave the mixed audio sounding hollow and incomplete. It is impossible to anticipate comb filtering without the use of advanced signal analysis tools. A trained ear can identify comb filtering, but an untrained ear will just hear poor audio. For this reason, it is wise to avoid unnecessary effects processing whenever possible.
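The simplest case of comb filtering, a signal summed with a delayed copy of itself, can be sketched as follows. The 1 ms (48-sample) delay at 48 kHz is a hypothetical value; in general a delay of D samples produces notches at odd multiples of fs/(2D).

```python
import cmath
import math

FS = 48000
DELAY = 48  # samples: a hypothetical 1 ms offset between two copies of a signal

def comb_gain(f, delay=DELAY, fs=FS):
    """|1 + e^(-j 2 pi f delay / fs)|: gain of a signal summed with a delayed copy of itself."""
    return abs(1 + cmath.exp(-2j * math.pi * f * delay / fs))

# Notches fall at odd multiples of fs / (2 * delay): 500 Hz, 1500 Hz, 2500 Hz, ...
notches = [(2 * k + 1) * FS / (2 * DELAY) for k in range(4)]

# Time-domain check at the first notch: a 500 Hz tone plus its 1 ms delayed copy
tone = [math.sin(2 * math.pi * 500 * n / FS) for n in range(4800)]
mixed = [tone[n] + tone[n - DELAY] for n in range(DELAY, len(tone))]
residual = max(abs(s) for s in mixed)

print("notches (Hz):", notches)
print(f"gain at 1 kHz: {comb_gain(1000):.3f}, residual of the 500 Hz tone: {residual:.1e}")
```

Between the notches the two copies reinforce (doubling at 1 kHz here), which gives the characteristic comb-shaped spectrum.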

EQ impact on headroom and overload

As with all active amplification processes, incorrectly applied equalisation can induce overload and clipping at certain frequencies. Indeed, music producers often emphasise that equalisation should generally be used to cut or reduce the effect of problem frequencies rather than to boost weak frequencies (White, 1997). The key with any amplification process is to ensure that sufficient headroom is available to avoid clipping. Katz (2002, p67) explains that amplification circuits sound “pretty nasty” when used near their overload level, and perhaps the extra headroom provided by high voltage valve circuits is a key reason for valve amplifiers being preferred over transistor systems by many music producers.
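The headroom arithmetic is simple because gains expressed in decibels add. In this sketch (hypothetical levels), a tone peaking at -4 dBFS that receives a 6 dB boost at its own frequency ends up 2 dB over full scale, so about 2 dB of extra headroom, for example a lower input or fader level, would be needed to avoid clipping.

```python
import math

def db_to_gain(db):
    return 10 ** (db / 20)

def gain_to_db(g):
    return 20 * math.log10(g)

FS = 48000
PEAK_DBFS = -4.0  # hypothetical track peak before EQ
BOOST_DB = 6.0    # hypothetical boost centred on the tone's own frequency

# For a pure tone, a boost centred on its frequency acts as a plain gain change
x = [db_to_gain(PEAK_DBFS) * math.sin(2 * math.pi * 100 * n / FS) for n in range(FS // 100)]
y = [db_to_gain(BOOST_DB) * s for s in x]

y_peak_dbfs = gain_to_db(max(abs(s) for s in y))
over = sum(1 for s in y if abs(s) > 1.0)  # samples a 0 dBFS ceiling would clip
print(f"peak after boost: {y_peak_dbfs:+.2f} dBFS, {over} of {len(y)} samples over full scale")
```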

Low bass frequencies can cause considerable problems, as the human ear becomes much less sensitive as frequency decreases, so large amounts of low frequency energy can go unheard while still consuming headroom. Bass boost EQ should therefore be used with caution and with a high quality monitoring system to avoid creating “serious problems” (Katz, 2002, p106). Even a dry signal can contain low frequency overloads that go unnoticed on a poor quality monitoring system. Such signals could be induced by sub-harmonics of a musical instrument, or indeed by distant traffic rumble or building vibrations picked up by sensitive microphones. It may therefore be necessary to use low-cut filtering to remove high energy levels below the musical range (i.e. below 40 Hz) to improve the overall sound.
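A low-cut filter of the kind suggested above can be sketched with the RBJ ‘Audio EQ Cookbook’ high-pass biquad. The 40 Hz cut-off follows the text; the second-order Butterworth response (Q = 0.707) and the 48 kHz sample rate are assumptions. The printout shows strong attenuation of 15 Hz rumble while the 100 Hz region and above are left almost untouched.

```python
import cmath
import math

FS = 48000.0

def highpass(fc, q=1 / math.sqrt(2), fs=FS):
    """RBJ cookbook second-order high-pass biquad (Butterworth when Q = 0.707)."""
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [(1 + cw) / 2, -(1 + cw), (1 + cw) / 2]
    a = [1 + alpha, -2 * cw, 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def gain_db(b, a, f, fs=FS):
    """Magnitude response of the biquad at f Hz, in dB."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

b, a = highpass(40.0)
for f in (15, 40, 100, 330):  # rumble, cut-off, bass region, E4
    print(f"{f:>4} Hz: {gain_db(b, a, f):6.2f} dB")
```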

Bob Katz (2002, p63) further explains that EQ and filter systems can cause overloads even when the filter is set to cut or attenuate. Both digital and analogue filters have their own resonant characteristics which can induce ringing in an audio signal. The result is that cutting a frequency with a particular equaliser can cause ringing at a different frequency, effectively introducing a boost which may cause overload, as Katz explains:

Contrary to popular belief, an over (clipping) can be generated even if a filter is set for attenuation instead of boost, because filters can ring; they also can change the peak level as the frequency balance is skewed. Digital processors can also overload in a fashion undetectable by a digital meter.
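Katz’s point that a cut can raise the peak level, simply because the frequency balance is skewed, can be shown with an idealised example. The signal below is a fundamental plus a third harmonic at one third of its level. Removing the harmonic entirely, with a perfect phase-free cut that leaves the fundamental untouched (an assumption no real equaliser meets), raises the peak by about half a decibel.

```python
import math

N = 4800
phase = [2 * math.pi * n / N for n in range(N)]  # exactly one cycle of a hypothetical tone

# Fundamental plus a third harmonic at one third of its level (the start of a square-like wave)
full = [math.sin(p) + math.sin(3 * p) / 3 for p in phase]
# The same signal after an idealised cut that removes the third harmonic completely
cut = [math.sin(p) for p in phase]

def peak(x):
    return max(abs(s) for s in x)

rise_db = 20 * math.log10(peak(cut) / peak(full))
print(f"peak before cut {peak(full):.4f}, after cut {peak(cut):.4f} ({rise_db:+.2f} dB)")
```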

Digital systems have additional overload issues because the mathematical processes involved can produce intermediate values that exceed the available internal range, and that must be truncated or quantised (rounded) before the data is output from the device. At each stage of internal digital processing, dithering should be used to reduce truncation noise. However, many low-standard devices provide neither sufficient internal headroom nor extensive dithering, and the result can be an output signal which appears to be in range, but which has experienced clipping and overload internally.
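Internal overload of this kind can be illustrated with a hypothetical two-stage fixed-point chain: a +6 dB EQ boost followed by a -6 dB output trim, with the signal clipped to full scale after each stage. The output peak looks safely in range, yet the waveform has been flattened inside the device; with enough internal headroom (the ‘ideal’ path) the same settings would leave the signal unchanged.

```python
import math

CEILING = 1.0  # hypothetical internal full-scale limit of a fixed-point processing stage

def clip(s):
    return max(-CEILING, min(CEILING, s))

FS = 48000
x = [0.8 * math.sin(2 * math.pi * 100 * n / FS) for n in range(FS // 100)]  # one cycle, peak about -1.9 dBFS

boost = 10 ** (6 / 20)   # stage 1: hypothetical +6 dB EQ boost
trim = 10 ** (-6 / 20)   # stage 2: hypothetical -6 dB output trim
ideal = [trim * (boost * s) for s in x]             # enough internal headroom: nothing clips
fixed = [clip(trim * clip(boost * s)) for s in x]   # ceiling applied after every stage

out_peak = max(abs(s) for s in fixed)
distortion = max(abs(i - f) for i, f in zip(ideal, fixed))
print(f"output peak {out_peak:.3f} (looks in range), max deviation from ideal {distortion:.3f}")
```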

Bypass effects

In outboard hardware, it is quite rare to find a ‘true-bypass’ system, and the same can be true of digital software processors. This means that if the EQ is connected, even with every gain setting at zero or with the EQ ‘bypassed’, there will usually still be some subtle alteration to the output signal. In the case of analogue systems, this can be because the audio still passes through the transistors and other components in the circuitry, even when they are not actively processing the signal, and simply passing audio through these components has a small but noticeable effect. For this reason, if EQ or any other effect process is to be bypassed, it is recommended that the circuitry is removed from the signal chain entirely (Owsinski, 2000, p25).

The precious midrange

Harmonic weakening

It is well reported that the musical midrange frequencies (150–1000 Hz) are notoriously delicate when equalisation is used (Huber & Runstein, 2005, p452). This is because the frequencies in question include the fundamental pitches of many popular instruments. Manipulating frequencies within the midrange can have a major effect on the musicality of individual instruments. The use of EQ to enhance timbral qualities such as perceived warmth, presence and depth is certainly a valuable exercise. However, if this process impacts the balance of pitch within a musical phrase, the result can be detrimental to the quality of the processed audio.
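The link between the midrange and musical pitch can be checked with the equal-tempered tuning formula f = 440 × 2^((m - 69)/12), where m is the MIDI note number and A4 = 440 Hz is assumed. The short sketch lists every note whose fundamental lies in the 150–1000 Hz band quoted above: D#3 to B5, which covers a large part of the guitar and vocal ranges.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def midi_to_hz(m):
    """Equal-tempered pitch of MIDI note m, assuming A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2 ** ((m - 69) / 12)

def midi_to_name(m):
    """Scientific pitch name, e.g. MIDI 60 -> 'C4'."""
    return f"{NOTE_NAMES[m % 12]}{m // 12 - 1}"

midrange = [(midi_to_name(m), round(midi_to_hz(m), 1))
            for m in range(128) if 150.0 <= midi_to_hz(m) <= 1000.0]
print(len(midrange), "notes:", midrange[0], "to", midrange[-1])
```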

A simple example is to look at a single musical note and the effect of EQ enhancement within the musical range. Figure 2 shows the frequency spectrum of an E4 note played on guitar, and that of the same signal boosted parametrically around the fundamental pitch of 329.6 Hz. An EQ boost at such frequencies might be employed to make the guitar more prominent within a complete mix. However, it can be seen from Figure 2 that the major effect is to upset the harmonic balance of the note being played: the higher frequency harmonics of the guitar note are weakened relative to the boosted fundamental. The result is a less musical sound, which more closely resembles a single synthesised tone than the rich harmonic note of the guitar that originally produced it.

Figure 2
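The effect on harmonic balance can be sketched by evaluating a parametric boost centred on the E4 fundamental at each harmonic of the note. The gain and Q are not given in the text, so a +9 dB boost with Q = 2 is assumed, along with ideal integer harmonics (a real guitar string is slightly inharmonic) and the RBJ ‘Audio EQ Cookbook’ peaking filter at 48 kHz. Every harmonic ends up lower relative to the fundamental than it was before.

```python
import cmath
import math

FS = 48000.0
F0 = 329.6               # E4 fundamental, as in Figure 2
BOOST_DB, Q = 9.0, 2.0   # assumed settings; the article does not state them

def peaking_eq(f0, db, q, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalised so that a[0] == 1."""
    big_a = 10 ** (db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cw, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cw, 1 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def gain_db(b, a, f, fs=FS):
    """Magnitude response of the biquad at f Hz, in dB."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

b, a = peaking_eq(F0, BOOST_DB, Q)
# Change in each harmonic's level relative to the fundamental caused by the boost
relative = [gain_db(b, a, k * F0) - gain_db(b, a, F0) for k in range(1, 9)]
for k, r in enumerate(relative, start=1):
    print(f"harmonic {k} ({k * F0:6.1f} Hz): {r:+6.2f} dB relative to the fundamental")
```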

High-Q effects

Using parametric EQ within the midrange can also have an adverse effect on the musicality of the processed audio if the Q setting is high. In general, a broad EQ band in the midrange gives a more natural and musically pleasing result, whereas a narrow EQ peak can easily upset the tonal balance (www.mackie.com, 2007). If the Q setting of any midrange parametric EQ is too high, it is possible to degrade the musical makeup of a collection of notes.

For example, Figure 3 shows the frequency spectra for raw and equalised guitar audio. In this example of an E major chord played on guitar, the processed audio has had narrow parametric attenuation (-18 dB, Q = 10) applied at the frequency of the major third (G#3, 208 Hz). It can be seen that the G#3 component of the processed audio has reduced power, which causes the processed audio to sound more like an E5 chord (major third removed) than the original E major chord.

The E5 guitar chord is often referred to as a ‘power’ chord because of its regular use in heavy rock music. A producer should therefore be very careful with EQ use in the midrange frequencies because it has the capability to alter the balance of pitch within a musical phrase to the extent that the identification of the processed audio, for specific genres of music, might become misinterpreted.

Figure 3
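The chord example can be reproduced numerically. The sketch below applies the stated -18 dB, Q = 10 cut at 208 Hz, modelled (as an assumption) with an RBJ ‘Audio EQ Cookbook’ peaking filter at 48 kHz, and reports the attenuation of each note of an open-position E major chord (E2, B2, E3, G#3, B3, E4). This voicing is assumed because the article does not state which was played. Only G#3 is heavily cut; the roots and fifths lose at most a couple of decibels, leaving what is essentially an E5 power chord.

```python
import cmath
import math

FS = 48000.0

def peaking_eq(f0, db, q, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalised so that a[0] == 1."""
    big_a = 10 ** (db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cw, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cw, 1 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def gain_db(b, a, f, fs=FS):
    """Magnitude response of the biquad at f Hz, in dB."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

def midi_to_hz(m):
    return 440.0 * 2 ** ((m - 69) / 12)  # equal temperament, A4 = 440 Hz

# Assumed open-position E major voicing (MIDI note numbers)
CHORD = {"E2": 40, "B2": 47, "E3": 52, "G#3": 56, "B3": 59, "E4": 64}

b, a = peaking_eq(208.0, -18.0, 10.0)  # the -18 dB, Q = 10 cut described in the text
attenuation = {name: gain_db(b, a, midi_to_hz(m)) for name, m in CHORD.items()}
for name, g in attenuation.items():
    print(f"{name:>3} ({midi_to_hz(CHORD[name]):6.1f} Hz): {g:6.2f} dB")
```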

Tingen (2007) reports that engineer Tom Elmhirst uses a number of very high Q attenuators to reduce some particularly harsh frequencies evident in a vocal track. In particular, a notch attenuator of -18 dB at 465 Hz, with a Q of 100, is used. This notch EQ is used to mould and improve the overall tonality of the vocal recording. However, the fundamental pitch of the musical note A#4 is at 466 Hz, and the relative power of this note will certainly be affected by the filter. The filter is narrow enough to have no major effect on any other musical frequencies within the midrange, so the notch will effectively reduce the harshness of the vocalist’s voice. But if the vocal melody includes the A#4 note, there will be a significant attenuation for the duration of that particular note. Tingen explains that this corrective treatment was necessary owing to sonic issues with the recorded vocal track. In this case, the required ‘fix’ highlights the compromises which must be made when corrective treatment is added at a late stage in the production. Many digital software EQ systems allow such extreme settings to be used; in many cases, however, the use of such extreme settings indicates a flaw at an earlier stage of the production process.
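The same kind of calculation shows how closely the 465 Hz notch sits to A#4. Using the settings reported by Tingen (-18 dB, Q = 100) in an assumed RBJ ‘Audio EQ Cookbook’ peaking filter at 48 kHz, the notch is only about 4.7 Hz wide (f0/Q), yet A#4 at 466.2 Hz falls inside it and is cut by well over 10 dB, while the neighbouring A4 and B4 are barely touched.

```python
import cmath
import math

FS = 48000.0

def peaking_eq(f0, db, q, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalised so that a[0] == 1."""
    big_a = 10 ** (db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cw, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cw, 1 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def gain_db(b, a, f, fs=FS):
    """Magnitude response of the biquad at f Hz, in dB."""
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

F0, Q = 465.0, 100.0
b, a = peaking_eq(F0, -18.0, Q)
notes = {"A4": 440.0, "A#4": 440.0 * 2 ** (1 / 12), "B4": 440.0 * 2 ** (2 / 12)}
attenuation = {name: gain_db(b, a, f) for name, f in notes.items()}
print(f"approximate bandwidth: {F0 / Q:.2f} Hz")
for name, g in attenuation.items():
    print(f"{name:>3} ({notes[name]:.2f} Hz): {g:6.2f} dB")
```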

It is similarly possible with high Q parametric EQ to accentuate frequency components that are not in key with the music in question. Consider a complex audio signal containing an instrument playing a continuous note, say A4, which has a fundamental frequency of 440 Hz. In reality the audio signal would contain a whole spectrum of frequency components that are available for manipulation by EQ. If a mix engineer were to neglect the frequency of the note being played entirely and add a very narrow parametric boost at 430 Hz, a pitch bending effect could result. If the signal were boosted enough at 430 Hz, that component could feasibly become more powerful than the frequency of the note being played (440 Hz in this example). The result could be that the A4 note appears to be out of tune or out of key with the complete audio signal.
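The size of the mismatch in this example is easy to quantify in cents (hundredths of an equal-tempered semitone). In this minimal sketch, 430 Hz lies about 40 cents below A4, between G#4 (415.3 Hz) and A4, so a dominant component there would be likely to sound out of tune rather than like a different note.

```python
import math

def cents(f, ref):
    """Interval from ref to f in cents (100 cents = one equal-tempered semitone)."""
    return 1200 * math.log2(f / ref)

A4 = 440.0
boost_freq = 430.0               # the mis-placed narrow boost from the text
g_sharp4 = A4 * 2 ** (-1 / 12)   # the semitone below A4

offset = cents(boost_freq, A4)
print(f"430 Hz is {offset:.1f} cents from A4 (G#4 = {g_sharp4:.1f} Hz)")
```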

If mixed audio is processed with high Q equalisation in the midrange frequencies then it is also quite possible to accentuate frequencies that are not in key with the musical piece in question. This can have similar detrimental effects to those highlighted and can also induce resonances which are musically displeasing.

Equalisation of transient waveforms

Discussing the ‘envelope’ of transient data

Equalisation can be applied to transient waveforms to enhance particular sonic characteristics. A transient data signal is one which occurs owing to a sudden impulse or impact and exists for only a finite period of time; that is to say a transient signal decays over time (Thomson, 1993, p92). A transient waveform therefore represents captured data of a transient nature. Most natural musical sounds decay to nothing over time – some faster than others. Where the entire musical signal is to be discussed as a whole, this can be referred to as transient or ‘envelope’ analysis.

Envelope analysis is discussed, with the aid of detailed waveform analysis of many instruments, by Howard and Angus (2006, p216-226). It can be seen that the attack or onset of the music being played is a critical and defining attribute of the timbre of the reproduced sound. The onset of any sound is defined as the profile of the waveform between the time at which the sound appears and the time at which it reaches its highest amplitude (see Figure 5.1). In listening tests, if the onset of a musical sound is removed, it can sometimes become very difficult to identify the instrument being played (Masri and Bateman, 1996).

Many instruments and musicians can sustain a steady-state envelope period for as long as desired. For example, a trumpeter can decide whether to play a short burst of sound lasting less than a second, or a sustained note over a much longer period of time. Once the musician completes their action, the transient enters its decay phase and the sound excitation falls back to the level of the ambient noise floor.

In contrast, many percussive instruments have little or no steady-state or sustain period. Once the sound has reached its highest level, it immediately enters its offset phase and the waveform decays away. In the case of percussion instruments, a fast attack can produce a desirable ‘snap’ in the sound.

Experimenting with ‘absent’ frequencies

It has been stated previously that EQ is used to manipulate the effects of frequencies present in a section of audio. However, experience has shown that EQ can also be used to enhance frequencies that were never particularly apparent in the first place. The term ‘absent’ frequencies will be used here to describe frequencies outside the principal fundamental and harmonic frequencies of the instrument in question. In certain cases, subtly enhancing absent or weak frequencies can add a somewhat indescribable improvement to the produced audio. Two examples are boosting the upper midrange of a bass drum sound to improve the attack and add ‘snap’ or ‘click’, and adding high frequency ‘air’ to a vocal track. The scientific basis for this type of enhancement should, however, be investigated in order to fully understand the implications of such EQ techniques.

A transient kick drum waveform and its corresponding frequency spectrum are shown in Figure 4. The frequency spectrum has been plotted on two different scales to help identify the following observations:

- The fundamental frequency of the kick drum is approximately 50 Hz

- The most dense frequency excitation occurs between approximately 40 Hz and 220 Hz

- Distinct but low levels of frequency excitation are apparent in the band 220 Hz – 1500 Hz

- There is very little frequency content in the kick drum signal above 1500 Hz

The following can also be observed from the time domain plot:

- The attack period lasts for approximately 10 ms

- The decay period lasts approximately 200 ms

It is quite difficult to specify exactly what the decay period is without first defining the ‘end’ of the waveform. However, the exact definition of the decay period is not required for this type of analysis, so a value of 200 ms is a suitable approximation.

Figure 4
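The attack and decay figures above can be estimated from an amplitude envelope, and doing so shows why the ‘end’ of the waveform must be defined. The sketch uses a synthetic envelope rather than the recorded kick: a hypothetical 10 ms linear attack followed by an exponential decay whose time constant (43.4 ms) was chosen so that the level falls by 40 dB after about 200 ms. Measuring the decay to -60 dB instead gives a figure about 50 per cent longer, so any quoted decay time depends on the threshold chosen.

```python
import math

FS = 48000
ATTACK_S = 0.010  # hypothetical 10 ms linear attack
TAU_S = 0.0434    # hypothetical decay time constant (about -40 dB after 200 ms)

def envelope(t):
    """Synthetic kick-drum amplitude envelope: linear rise, then exponential decay."""
    return t / ATTACK_S if t < ATTACK_S else math.exp(-(t - ATTACK_S) / TAU_S)

env = [envelope(n / FS) for n in range(int(0.5 * FS))]
peak_i = max(range(len(env)), key=env.__getitem__)  # first sample at the peak
attack_ms = 1000 * peak_i / FS

def decay_ms(threshold_db):
    """Time from the peak until the envelope first drops below threshold_db (relative to peak)."""
    level = env[peak_i] * 10 ** (threshold_db / 20)
    end_i = next(i for i in range(peak_i, len(env)) if env[i] < level)
    return 1000 * (end_i - peak_i) / FS

print(f"attack {attack_ms:.1f} ms, decay to -40 dB {decay_ms(-40):.0f} ms, to -60 dB {decay_ms(-60):.0f} ms")
```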

An ‘enhanced’ or ‘snappier’ transient onset usually refers to a more rapid attack rise or a shorter attack time. Many popular texts suggest EQ boosting the kick drum in the 3-6 kHz region as a method of enhancing the attack of the signal or adding a ‘snap’ to the sound – for example, White (2003, p70) and Robinson (2006). However, the frequency profiles shown in Figure 4, and those discussed by Rossing (2000, p34), suggest that there is little to be gained in boosting in this region, as there is very little content to enhance. The mechanical design of the bass drum does not lend itself to the excitation of very high frequency vibration components. So even if there is any recorded energy around the 3-6 kHz region, it may just be spill from the cymbals or buzz from the spring on the kick pedal.

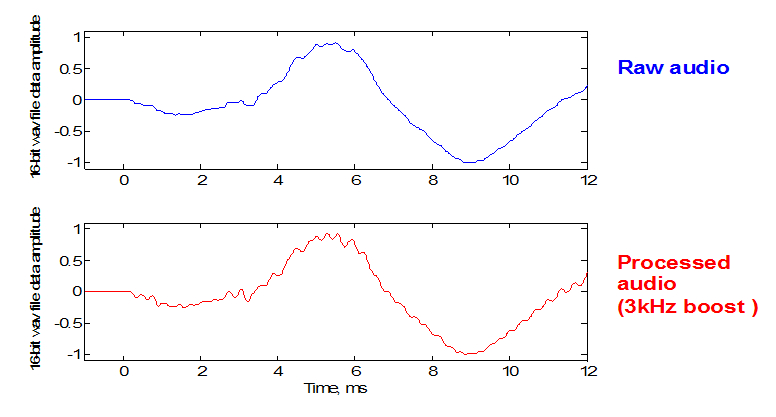

Figure 5 shows the actual effect of boosting at 3 kHz on the attack of the kick drum waveform.

Figure 5

It can be seen that the 3 kHz boost brings out a sinusoidal high frequency component in the signal. However, the time taken for the signal to reach its peak value (the attack time) has not actually been affected at all. Furthermore, it can be shown that the 3 kHz component extends and is evident for the entire signal in the processed audio – whereas the actual intention of this high frequency boosting technique was to only alter the attack or initial period of the kick drum waveform.
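The time-invariance of EQ is the reason the 3 kHz component persists. In the sketch below a synthetic kick (a 50 Hz tone with a hypothetical 10 ms linear attack and exponential decay) is mixed with a low-level 3 kHz component standing in for spill or other high frequency content. It is then passed through an assumed +12 dB, Q = 1 peaking boost at 3 kHz (RBJ ‘Audio EQ Cookbook’ biquad, 48 kHz). Because the filter is linear, its 3 kHz output can be computed on that component alone: it is raised by the same 12 dB in the attack window and in the decay window, while the time taken to reach the peak barely moves.

```python
import math

FS = 48000

def peaking_eq(f0, db, q, fs=FS):
    """RBJ Audio EQ Cookbook peaking biquad, normalised so that a[0] == 1."""
    big_a = 10 ** (db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cw = math.cos(w0)
    b = [1 + alpha * big_a, -2 * cw, 1 - alpha * big_a]
    a = [1 + alpha / big_a, -2 * cw, 1 - alpha / big_a]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def biquad(x, b, a):
    """Filter a list of samples with a direct form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for s in x:
        out = b[0] * s + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, s, y1, out
        y.append(out)
    return y

def rms(x):
    return math.sqrt(sum(s * s for s in x) / len(x))

def envelope(t, attack=0.010, tau=0.0434):
    """Hypothetical kick envelope: linear rise, then exponential decay."""
    return t / attack if t < attack else math.exp(-(t - attack) / tau)

def peak_time_ms(x):
    return 1000 * max(range(len(x)), key=lambda i: abs(x[i])) / FS

n_samples = int(0.25 * FS)
kick = [envelope(n / FS) * math.sin(2 * math.pi * 50 * n / FS) for n in range(n_samples)]
hf = [0.001 * math.sin(2 * math.pi * 3000 * n / FS) for n in range(n_samples)]  # low-level 3 kHz content
raw = [k + h for k, h in zip(kick, hf)]

b, a = peaking_eq(3000.0, 12.0, 1.0)
processed = biquad(raw, b, a)
hf_boosted = biquad(hf, b, a)  # by linearity, this is the 3 kHz part of `processed`

def boost_in_window_db(start_s, end_s):
    win = slice(int(start_s * FS), int(end_s * FS))
    return 20 * math.log10(rms(hf_boosted[win]) / rms(hf[win]))

attack_boost = boost_in_window_db(0.005, 0.020)  # starts at 5 ms, after the filter has settled
decay_boost = boost_in_window_db(0.100, 0.200)
print(f"3 kHz boost: {attack_boost:.2f} dB (attack), {decay_boost:.2f} dB (decay)")
print(f"time to peak: {peak_time_ms(raw):.2f} ms raw, {peak_time_ms(processed):.2f} ms processed")
```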

It appears that the addition of a high frequency component to the signal gives a psychoacoustic enhancement to the attack of a waveform. That is to say that adding this component makes us think that the attack is more rapid or has been sharpened. A similar psychoacoustic effect is heard when adding high frequency ‘air’ to a vocal track.

Historical experience and simple listening tests can show that this technique does indeed improve our perception of the attack of the signal, but care must be taken to ensure that the rest of the waveform does not become degraded at the expense of this improvement. The perceived sound of the kick drum may well have been improved for the initial 10-20 ms onset period, but there is now an unwanted 3 kHz frequency component evident in the other 200 ms of the kick drum decay. Again, simple listening tests will show that it is possible to enhance the attack of the signal, but in many cases this technique can quickly manifest itself as an intolerable high frequency hum on the signal – not to mention the phase distortion and headroom issues also introduced by the use of EQ. Furthermore, the 3 kHz component will become significant and evident within the noise floor even when the instrument is not being played. Careful gating can reduce this particular effect, but ultimately this involves adding another audio processor to the signal chain, reducing the final audio quality further still.

To enhance the attack of a transient signal more effectively, it is suggested that the aim should be to increase the rate-of-change of the waveform in the initial onset period, so that the attack and onset of the waveform occur more quickly. Like many enhancements made to recorded audio, this can be done best, and with the least effect on the mixed audio, by physically improving the sound of the instrument before it is even recorded. With a kick drum it is possible to shorten the attack time and improve the ‘snap’ of the signal by altering the vibratile properties of the impacting materials. A soft kick beater impacting a slack drum head will undoubtedly give a relatively slow attack. If the beater is made of a hard material and the point of impact is also hard, the impulse and change of momentum will occur more quickly, so the attack will be enhanced. It is therefore quite possible to enhance the attack of a transient instrument by carefully considering the set-up prior to recording, as discussed by White (2003, p71).

Time domain processing

If audio processing is to be used to enhance the attack of the transient, then it is suggested that manipulation of the signal can be performed without the need to rely on frequency or spectrum based effects. After all, the signal property to be enhanced is the dynamic waveform amplitude, i.e. the rate-of-change (the attack) of the signal. Dynamic processing algorithms do not have the heavy computation overhead of frequency based methods. Furthermore, the phase distortion and induced noise issues encountered with filtering techniques are avoided.

It is possible to identify dynamic and time based processing techniques for enhancing the attack period of a transient waveform. Two options for enhancing the attack of a transient signal are pitch shifting and compression. However, standard pitch shifting and compression devices are insufficient for this purpose because they generally process the whole audio signal, whereas enhancing the attack of a transient signal requires only the first 10-20 ms to be manipulated. Owsinski (1999, p55) describes a method for isolating the attack period of the signal for separate processing. The method uses a short release gate on a duplicate channel to isolate the attack profile of the waveform, which can then be processed separately and mixed back with the original signal.
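Owsinski’s duplicate-channel approach can be sketched in a few lines. The example below is a simplification under stated assumptions: the gate is taken to open exactly at the onset, hold for 15 ms and release linearly over 5 ms, and a plain 2x gain stands in for whatever processing (compression, pitch shifting) would be applied to the isolated attack. After the gate closes, the mix is identical to the original signal, so the decay is untouched.

```python
import math

FS = 48000
HOLD_S, RELEASE_S = 0.015, 0.005  # hypothetical gate timing
ATTACK_GAIN = 2.0                 # hypothetical stand-in for processing the isolated attack

def envelope(t, attack=0.010, tau=0.0434):
    """Synthetic kick envelope: 10 ms linear rise, then exponential decay (assumed values)."""
    return t / attack if t < attack else math.exp(-(t - attack) / tau)

def gate_gain(t):
    """Simplified gate assumed to open at the onset (t = 0), hold, then release linearly."""
    if t < HOLD_S:
        return 1.0
    if t < HOLD_S + RELEASE_S:
        return 1.0 - (t - HOLD_S) / RELEASE_S
    return 0.0

n_samples = int(0.25 * FS)
original = [envelope(i / FS) * math.sin(2 * math.pi * 50 * i / FS) for i in range(n_samples)]
duplicate = [gate_gain(i / FS) * s for i, s in enumerate(original)]  # gated copy: attack only
processed = [ATTACK_GAIN * s for s in duplicate]                      # process the copy alone
mixed = [o + p for o, p in zip(original, processed)]                  # blend back with the original

gate_closed = next(i for i in range(n_samples) if gate_gain(i / FS) == 0.0)
print("attack peak:", round(max(map(abs, original[:gate_closed])), 3), "->",
      round(max(map(abs, mixed[:gate_closed])), 3))
```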

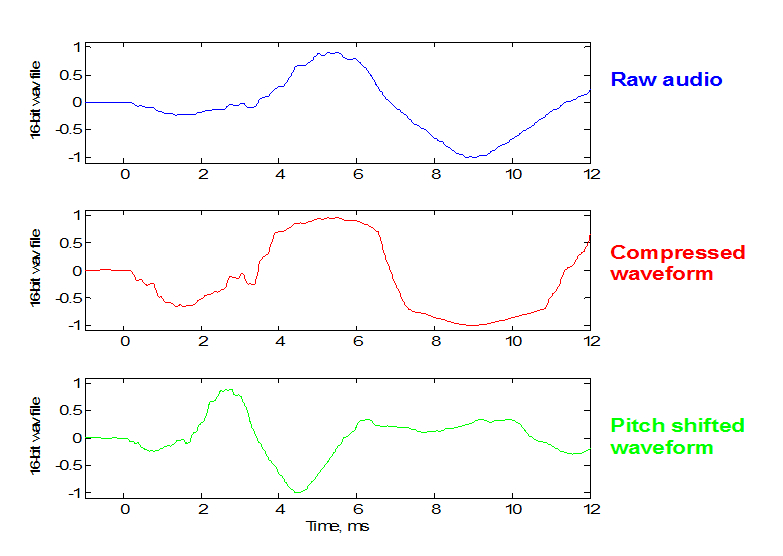

For interest, examples of compression and pitch-shifting techniques are applied to the previously discussed kick drum waveform, to highlight the applicability of time based processing methods for increasing the rate-of-change of a transient attack period. Figure 6 shows that the rate of change of the waveform can be increased by using heavy compression. Comparison between the raw and compressed waveforms at approximately 7ms shows how the waveform gradient becomes steeper owing to compression. Furthermore, this type of compression acts as controlled distortion, which generates musically related harmonics of the original waveform. Of course the compression is not required for the decay period of the signal, so a standard compressor system would not be sufficient to achieve this processing.
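The steepening effect of heavy compression can be illustrated with a static (instantaneous) compression curve applied to a hypothetical 10 ms linear attack ramp, with make-up gain restoring the original peak. The threshold (0.1, about -20 dBFS) and 10:1 ratio are assumptions, and a real compressor’s attack and release behaviour is ignored. The compressed ramp reaches half of its peak in under a millisecond instead of 5 ms, although it then flattens as it approaches the peak.

```python
import math

FS = 48000
THRESHOLD, RATIO = 0.1, 10.0  # hypothetical: about -20 dBFS threshold, 10:1 ratio

def compress(s, threshold=THRESHOLD, ratio=RATIO):
    """Static hard-knee compression curve (no attack/release smoothing)."""
    mag = abs(s)
    if mag > threshold:
        mag = threshold + (mag - threshold) / ratio
    return math.copysign(mag, s)

ramp = [n / 480 for n in range(481)]       # hypothetical 10 ms linear rise to full scale
squashed = [compress(s) for s in ramp]
makeup = 1.0 / max(squashed)               # make-up gain restores the original peak
compressed = [makeup * s for s in squashed]

def time_to_ms(x, fraction):
    """Time (ms) at which x first reaches `fraction` of its peak."""
    target = fraction * max(x)
    return 1000 * next(i for i, s in enumerate(x) if s >= target) / FS

def max_gradient(x):
    return max(b - a for a, b in zip(x, x[1:]))

print(f"time to 50%: raw {time_to_ms(ramp, 0.5):.2f} ms, compressed {time_to_ms(compressed, 0.5):.2f} ms")
print(f"steepest step: raw {max_gradient(ramp):.4f}, compressed {max_gradient(compressed):.4f}")
```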

The pitch-shifted signal (using a simple time based resampling technique) also shows an increased rate of change during the attack period, and the peak amplitude is reached within a much shorter timeframe. However, this approach alters the complete frequency makeup of the waveform so, as with the compression technique, it is not desirable to continue pitch-shifting for the duration of the entire waveform. So, although extra hardware and signal gating may be required, it is suggested that the methods described here are considered rather than simply reaching for the EQ to solve all post-production mix issues.

Figure 6
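Resampling-based pitch shifting shortens the attack in direct proportion to the resampling ratio, as this sketch shows. It applies naive linear-interpolation resampling, with no anti-aliasing filter and so not suitable for real use, to a synthetic amplitude envelope (a hypothetical 10 ms linear attack and exponential decay). A ratio of 1.5, an assumed value equivalent to raising the pitch by about 7 semitones, brings the peak forward from 10 ms to about 6.7 ms. The same operation would also move a 50 Hz fundamental to 75 Hz, which is the change in frequency makeup noted above.

```python
import math

FS = 48000
RATIO = 1.5  # hypothetical: 1.5x playback speed, about +7 semitones

def envelope(t, attack=0.010, tau=0.0434):
    """Synthetic kick envelope: 10 ms linear rise, then exponential decay (assumed values)."""
    return t / attack if t < attack else math.exp(-(t - attack) / tau)

def resample(x, ratio):
    """Naive linear-interpolation resampling (no anti-aliasing filter); shortens x by `ratio`."""
    out, pos = [], 0.0
    while pos <= len(x) - 1:
        i = int(pos)
        frac = pos - i
        nxt = x[i + 1] if i + 1 < len(x) else x[i]
        out.append(x[i] * (1 - frac) + nxt * frac)
        pos += ratio
    return out

def attack_ms(x):
    """Time (ms) of the envelope peak."""
    return 1000 * max(range(len(x)), key=lambda i: x[i]) / FS

raw = [envelope(n / FS) for n in range(int(0.3 * FS))]
shifted = resample(raw, RATIO)
semitones = 12 * math.log2(RATIO)
print(f"attack {attack_ms(raw):.2f} ms -> {attack_ms(shifted):.2f} ms (+{semitones:.2f} semitones)")
```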

Discussion and conclusions

The addition of EQ to recorded audio always involves a compromise in the reproduced sound. Adding a little EQ at the expense of a little phase distortion is often more than acceptable. However, there are many common cases where EQ is used purely because it is the solution best known to the production engineer. It is possible to record quality audio tracks without needing to use heavy equalisation. Indeed, if the audio sounds good live, then the need for EQ at the mixing stage would indicate a limitation in the recording setup. It has been argued that, wherever possible, spectral issues should be minimised at the recording stage by practical methods. Accepting an imperfect take with the intention to ‘fix it in the mix’ inevitably leads to further inaccuracies and quality losses being introduced.

It is accepted that the perfect recording setup is rarely achievable, so some degree of signal manipulation will often be required at the mixing stage. It is an easy option to dive in with some form of EQ enhancement, but the negative impacts of over-equalising audio are often overlooked. This can sometimes be because the producer is so focused on improving the specific element of the sound in question that the artefacts created elsewhere in the audio are neglected. The use of EQ in the musical midrange frequencies has been shown to be a delicate issue, with high Q parametric EQ having the ability to unbalance the tonal makeup of specific musical sounds. Furthermore, using EQ to enhance dynamic transient waveforms can be inappropriate for certain signals. A dynamic time based signal with the desired fundamental frequency content is often best enhanced by time domain manipulation, as opposed to recursive frequency based manipulation.

As technologies in audio develop, the fact remains that the producer still has the ability to counteract all of the benefits gained by working with advanced equipment at high resolutions, bit rates and sampling frequencies. One simple oversight with an incorrectly applied EQ can reduce musicality and generate more noise and phase distortion than a modern processor can ever overcome. Indeed, it could be argued that the low noise qualities of digital recording systems mean that producers should be even more careful with post processing, as any errors are likely to become more obvious. Modern EQ systems allow exceptionally tight frequency bands (up to Q=100) to be manipulated by levels up to and exceeding 20dB. If an EQ system needs to be used at these extreme settings, this could feasibly indicate some kind of error or oversight at the recording stage.

Similarly, as advanced audio technology becomes increasingly available to home producers, the issue of education becomes much more important. It has been argued previously that improving education and knowledge transfer is essential to ensure that consumers and upcoming producers appreciate the skill and technique involved in the audio production process (Toulson, 2006, 2008). It is felt that advanced processing technologies should not be used as a replacement for quality audio recording techniques, and should not take the place of humanistic studio skills. Rather, producers and engineers should embrace advances in technology in order to enhance existing skills and so achieve quality audio reproduction.

Bibliography

Bohn, D. A. (2005) Constant-Q Graphic Equalizers, RaneNotes 101 & 107 combined, http://www.rane.com/note101.html, accessed June 2007.

Boyd, J. (2006) White Bicycles: making music in the 1960s, Serpent’s Tail, London.

Gibson, D. (2005) The Art of Mixing. 2nd Edition, Artist Pro.

Hood, J. L. (1999) Audio Electronics, Oxford, Newnes Publishing.

Huber, D. M. & Runstein, R. E. (2005) Modern Recording Techniques, 6th Edition, Oxford, Focal Press.

Katz, B. (2002) Mastering Audio, Focal Press.

Masri, P. and Bateman, A. (1996) Improved Modelling of Attack Transients in Music Analysis-Resynthesis. Proceedings of the International Computer Music Conference (ICMC), 1996.

Mellor, D. (1995) EQ: How and when to use it, Sound on Sound, March 1995.

Miller, R. (2005) Second-Order Digital Filtering Done Right, RaneNote 157, http://www.rane.com/note157.html, accessed June 2007.

Owsinski, B. (1999) The Mixing Engineer’s Handbook, Auburn Hills, MixBooks.

Owsinski, B. (2000) The Mastering Engineer’s Handbook, Auburn Hills, MixBooks.

Robinson, A. (Editor) (2006) Creative EQ. Computer Music Special, Volume 19: The Essential Guide to Mixing, p30-35.

Rossing, T. D. (2000) Science of Percussion Instruments, Singapore, World Scientific Publishing.

Rumsey, F. and McCormick, T. (2006) Sound and Recording, 5th Edition, Oxford, Focal Press.

Tingen, P. (2006) Jim Abbiss: Producing Kasabian and Arctic Monkeys, Sound on Sound, September 2006, p100-106.

Tingen, P. (2007) Secrets of Mix Engineers: Tom Elmhirst. Sound on Sound, August 2007, p88-93.

Toulson, E. R. (2006) A need for universal definitions of audio terminologies and improved knowledge transfer to the audio consumer. Proceedings of the 2006 Art of Record Production Conference, Edinburgh.

Toulson, E. R. (2008) Managing Widening Participation in Music and Music Production, Proceedings of the Audio Engineering Society UK Conference, Cambridge, April 2008.

Walker, M. (2007) PSP Neon Linear Phase Equaliser Plug-ins, Sound on Sound, February 2007, p164-165.

White, P. (1997) Equal Opportunities. Sound on Sound, February 1997.

White, P. (2003) Creative Recording – part one, effects and processors, 2nd Edition, London, Sanctuary.

www.mackie.com (2007) http://www.mackie.com/technology/practicaleq_sm.html, accessed August 2007.